DataOps, short for Data Operations, is an approach to managing the flow of data across an organization. It combines agile methodologies, DevOps practices, and data engineering principles to improve collaboration, reduce errors, and accelerate delivery of data products. For large enterprises, where data ecosystems are vast and complex, implementing DataOps can mean the difference between innovation and stagnation.

Enterprises dealing with massive data volumes often struggle with slow data delivery, poor governance, and misalignment between data producers and consumers. DataOps addresses these issues by enabling continuous integration and delivery (CI/CD) of data pipelines, automated testing, and better collaboration between teams.

Key Challenges of DataOps at Scale

Organizational Silos

Large organizations often operate in silos, where data engineers, analysts, and business stakeholders rarely interact effectively. These barriers hinder the creation of unified data pipelines and prevent enterprises from gaining actionable insights quickly.

Toolchain Complexity

Modern data stacks involve dozens of tools across ingestion, processing, storage, and analytics. Managing this complexity without a standardized framework can lead to integration failures, escalating costs, and performance issues.

Data Quality and Governance Issues

Data quality is the foundation of reliable analytics. Enterprises face significant challenges in maintaining high standards of quality across distributed data systems. Regulatory compliance further complicates this, requiring robust governance processes and clear data lineage.

Best Practices for Implementing DataOps

Foster Cross-Functional Collaboration

DataOps demands breaking down barriers between teams. Enterprises should establish shared goals and metrics to align data engineering, analytics, and operations teams. This fosters accountability and faster iteration.

Automate Data Workflows with CI/CD

By adopting CI/CD for data pipelines, organizations can shorten development cycles, minimize human errors, and ensure seamless deployments. Automation ensures that changes are tested and rolled out with minimal downtime.
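As a rough illustration of what "tested before rollout" can mean for a pipeline, the sketch below pairs a small transformation step with a test that a CI job could run on every change. The function `normalize_orders`, its field names, and the rule that negative amounts are dropped are all hypothetical and chosen only for the example; a real pipeline would test its own transformations.

```python
from datetime import date


def normalize_orders(rows):
    """Hypothetical transformation step: clean ids, parse dates, drop negative amounts."""
    cleaned = []
    for row in rows:
        amount = float(row["amount"])
        if amount < 0:
            # Assumption for this sketch: negative amounts are out of scope here.
            continue
        cleaned.append({
            "customer_id": row["customer_id"].strip().upper(),
            "order_date": date.fromisoformat(row["order_date"]),
            "amount": round(amount, 2),
        })
    return cleaned


def test_normalize_orders():
    """A check a CI job could run before the pipeline change is deployed."""
    raw = [
        {"customer_id": " c-17 ", "order_date": "2024-03-01", "amount": "19.999"},
        {"customer_id": "c-18", "order_date": "2024-03-02", "amount": "-5"},
    ]
    result = normalize_orders(raw)
    assert len(result) == 1
    assert result[0]["customer_id"] == "C-17"
    assert result[0]["order_date"] == date(2024, 3, 1)
    assert result[0]["amount"] == 20.0


if __name__ == "__main__":
    test_normalize_orders()
    print("transformation tests passed")
```

In practice a test runner such as pytest would discover `test_normalize_orders` automatically, and the CI system would block the deployment if it fails.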

Build Comprehensive Data Quality Frameworks

Integrate tools like Great Expectations or Soda.io to monitor data quality at every stage. Set up automated checks for schema changes, null values, and outliers to avoid data integrity issues.
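The three kinds of checks mentioned above can be sketched in plain Python to show the logic involved. This is not the API of Great Expectations or Soda; the function names, the expected column set, and the outlier threshold are assumptions made for illustration. The outlier check uses a modified z-score based on the median absolute deviation, which is one common choice among several.

```python
from statistics import median

# Hypothetical expected columns for an "orders" dataset.
EXPECTED_COLUMNS = {"order_id", "amount", "country"}


def check_schema(rows, expected_columns=EXPECTED_COLUMNS):
    """Report rows whose column set differs from the expected one."""
    problems = []
    for i, row in enumerate(rows):
        if set(row) != set(expected_columns):
            problems.append(f"row {i}: unexpected columns {sorted(row)}")
    return problems


def check_nulls(rows, column, max_null_ratio=0.0):
    """Report a column whose share of missing values exceeds the allowed ratio."""
    nulls = sum(1 for row in rows if row.get(column) is None)
    ratio = nulls / len(rows) if rows else 0.0
    if ratio > max_null_ratio:
        return [f"{column}: null ratio {ratio:.2f} exceeds {max_null_ratio:.2f}"]
    return []


def check_outliers(rows, column, threshold=3.5):
    """Flag values whose modified z-score (median/MAD based) exceeds the threshold."""
    values = [row[column] for row in rows if row.get(column) is not None]
    if len(values) < 3:
        return []
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # all values (nearly) identical; nothing to flag in this sketch
    return [
        f"{column}: outlier value {v}"
        for v in values
        if 0.6745 * abs(v - med) / mad > threshold
    ]


if __name__ == "__main__":
    sample = [
        {"order_id": 1, "amount": 10.0, "country": "DE"},
        {"order_id": 2, "amount": 12.0, "country": "FR"},
        {"order_id": 3, "amount": 11.0, "country": None},
        {"order_id": 4, "amount": 9.5, "country": "DE"},
        {"order_id": 5, "amount": 500.0, "country": "US"},
    ]
    issues = (
        check_schema(sample)
        + check_nulls(sample, "country")
        + check_outliers(sample, "amount")
    )
    for issue in issues:
        print(issue)
```

A pipeline stage would typically fail, or quarantine the batch, when the list of issues is not empty.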

Adopt Infrastructure as Code (IaC)

Using Terraform or Pulumi, enterprises can manage their data infrastructure like software. IaC enables reproducibility, scalability, and disaster recovery across environments.

Version Control for Data Assets

Just as code is versioned, enterprises must version data schemas, pipelines, and even datasets. This ensures traceability and facilitates rollbacks when issues arise.
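One lightweight way to make schema versions traceable is to derive a fingerprint from the schema itself and record it alongside each pipeline release. The sketch below assumes a schema is represented as a plain mapping of column name to type name; the helper names `schema_fingerprint` and `diff_schemas` are hypothetical, and dedicated tools handle dataset versioning far more thoroughly.

```python
import hashlib
import json


def schema_fingerprint(schema):
    """Return a short, deterministic hash of a schema given as {column: type_name}."""
    # Sorting keys makes the hash independent of column order in the mapping.
    canonical = json.dumps(schema, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def diff_schemas(old, new):
    """Describe added, removed, and retyped columns between two schema versions."""
    shared = set(old) & set(new)
    return {
        "added": sorted(set(new) - set(old)),
        "removed": sorted(set(old) - set(new)),
        "retyped": sorted(col for col in shared if old[col] != new[col]),
    }


if __name__ == "__main__":
    v1 = {"order_id": "int", "amount": "float", "country": "str"}
    v2 = {"order_id": "int", "amount": "decimal", "currency": "str"}
    print(schema_fingerprint(v1), "->", schema_fingerprint(v2))
    print(diff_schemas(v1, v2))
```

Storing the fingerprint with each deployment gives a rollback target: if a downstream job breaks, the diff shows which columns changed between the last good version and the current one.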

Why DataOps Requires Cultural Change

Implementing DataOps isn’t just about adopting new tools; it requires a shift in mindset. Teams must embrace agile principles, value continuous feedback, and work towards shared objectives rather than isolated KPIs.

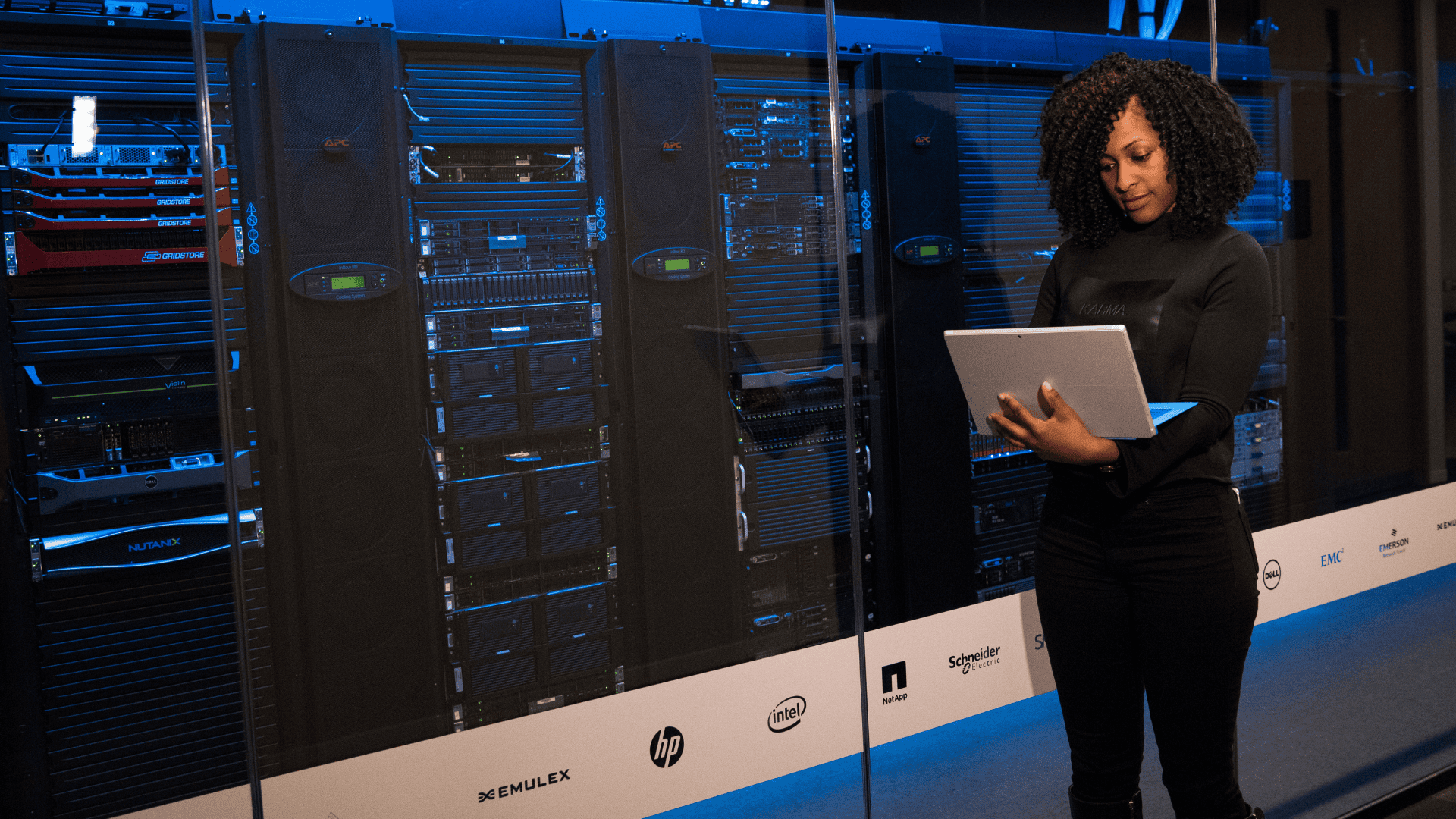

The Role of Cloud and Hybrid Architectures in DataOps

Large enterprises often run hybrid environments, mixing on-premise systems with cloud services. Adopting DataOps practices enables seamless orchestration across these environments, improving scalability and resilience.

Metrics for Measuring DataOps Success

Success in DataOps isn’t abstract. Enterprises should track specific metrics like pipeline deployment frequency, data incident rates, recovery times, and stakeholder satisfaction to assess progress and ROI.
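To make these metrics concrete, the sketch below computes three of them from simple records. The definitions used here (deployments per week, incidents per pipeline run, and mean time from detection to resolution) are one reasonable reading of the metrics named above, not a standard; the function names and sample data are hypothetical.

```python
from datetime import datetime, timedelta


def deployment_frequency(deploy_times, window_days):
    """Average number of pipeline deployments per week over the observation window."""
    return len(deploy_times) / (window_days / 7)


def incident_rate(incident_count, run_count):
    """Share of pipeline runs that produced a data incident."""
    return incident_count / run_count if run_count else 0.0


def mean_time_to_recovery(incidents):
    """Mean duration between detection and resolution, given (detected, resolved) pairs."""
    durations = [resolved - detected for detected, resolved in incidents]
    if not durations:
        return timedelta(0)
    return sum(durations, timedelta(0)) / len(durations)


if __name__ == "__main__":
    deploys = [datetime(2024, 1, day) for day in (2, 5, 9, 12, 16, 19, 23, 26)]
    incidents = [
        (datetime(2024, 1, 1, 8, 0), datetime(2024, 1, 1, 9, 0)),
        (datetime(2024, 1, 2, 8, 0), datetime(2024, 1, 2, 11, 0)),
    ]
    print("deployments per week:", deployment_frequency(deploys, window_days=28))
    print("incident rate:", incident_rate(len(incidents), run_count=120))
    print("mean time to recovery:", mean_time_to_recovery(incidents))
```

Stakeholder satisfaction is left out because it usually comes from surveys rather than pipeline logs.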

Tools and Technologies That Enable DataOps

| Category | Purpose | Example Tools |
|---|---|---|
| Workflow Orchestration | Automate and monitor pipelines | Apache Airflow, Prefect |
| Data Quality | Ensure accuracy and consistency | Great Expectations, Soda.io |
| CI/CD for Data | Automate testing and deployment | dbt, Jenkins |
| Infrastructure as Code | Manage infrastructure efficiently | Terraform, Pulumi |
| Monitoring & Observability | Real-time tracking of data pipelines | Prometheus, Grafana |

Common Pitfalls to Avoid

- Tool-First Approach: Over-reliance on tools without process and culture transformation leads to limited success.

- Neglecting Governance: Skipping governance in favor of speed exposes enterprises to compliance risks.

- Lack of Observability: Without robust monitoring, issues remain undetected until they cause business disruptions.

- Poor Alignment with Business Goals: Technical improvements must align with clear business outcomes to drive value.

Building a DataOps Culture

DataOps is as much about people as it is about technology. Encouraging a mindset of continuous improvement, agile iterations, and transparent communication is key. Leadership must champion this shift to establish it organization-wide.

Partnering with experts like BigData Boutique can accelerate this journey. As leaders in big data services, they provide strategic consulting and technical expertise to design and implement scalable DataOps practices tailored to your enterprise needs.

Enterprise Success Stories with DataOps

Consider a global retail enterprise that reduced its analytics reporting cycle from 24 hours to real time by implementing DataOps. With automated data pipelines and quality checks, it improved decision-making speed and accuracy. Another case involves a financial institution that used DataOps to meet stringent compliance requirements while reducing operational costs.

These examples highlight the transformative potential of DataOps when executed effectively.

Future Trends in DataOps for Enterprises

As data landscapes evolve, enterprises must prepare for trends such as AI-powered data operations, greater emphasis on data privacy, and the rise of self-service data platforms.

Conclusion

DataOps is no longer optional for large enterprises seeking to stay competitive in a data-driven world. It brings the agility, reliability, and governance needed to turn complex data ecosystems into strategic assets. By following these best practices (collaboration, automation, quality assurance, and governance), enterprises can build resilient and future-ready data platforms.

To accelerate your DataOps journey and unlock the full potential of your data ecosystem, partner with BigData Boutique. Their comprehensive big data services enable organizations to navigate complexity and achieve operational excellence at scale.

Peyman Khosravani is a global blockchain and digital transformation expert with a passion for marketing, futuristic ideas, analytics insights, startup businesses, and effective communications. He has extensive experience in blockchain and DeFi projects and is committed to using technology to bring justice and fairness to society and promote freedom. Peyman has worked with international organizations to improve digital transformation strategies and data-gathering strategies that help identify customer touchpoints and sources of data that tell the story of what is happening. With his expertise in blockchain, digital transformation, marketing, analytics insights, startup businesses, and effective communications, Peyman is dedicated to helping businesses succeed in the digital age. He believes that technology can be used as a tool for positive change in the world.