What defines “modern” 3D animation in sports games?

Modern 3D animation in sports games is defined by realism, fluidity, and responsiveness that blur the line between a game and a live sports broadcast. Player movements are captured and reproduced with high fidelity: soccer players dribble and basketball players pivot in ways that mirror real athlete biomechanics. A key marker of modern animation is the integration of motion capture and machine learning. For instance, EA’s FIFA series introduced HyperMotion technology with FIFA 22, which uses full-team motion capture data plus ML to generate thousands of new animations, so that players move more naturally than in earlier entries. Modern animation also relies on procedural blending: instead of playing discrete canned motions, the game blends animations on the fly to fit the gameplay context (for example, a player reaching for a ball at various heights with smooth transitions). Foot planting is another marker: players’ feet stay fixed to the ground when they should rather than sliding, a problem older games struggled with and that modern titles largely address with inverse kinematics. Attention to sport-specific detail matters too: jersey tugging, subtle fakes and feints, and follow-through after shots all add authenticity. In sum, “modern” sports game animation captures the athleticism and distinctive movements of players in a believable way, using motion capture and ML to achieve both realism and enough animation variety that gameplay looks as dynamic as a real match.

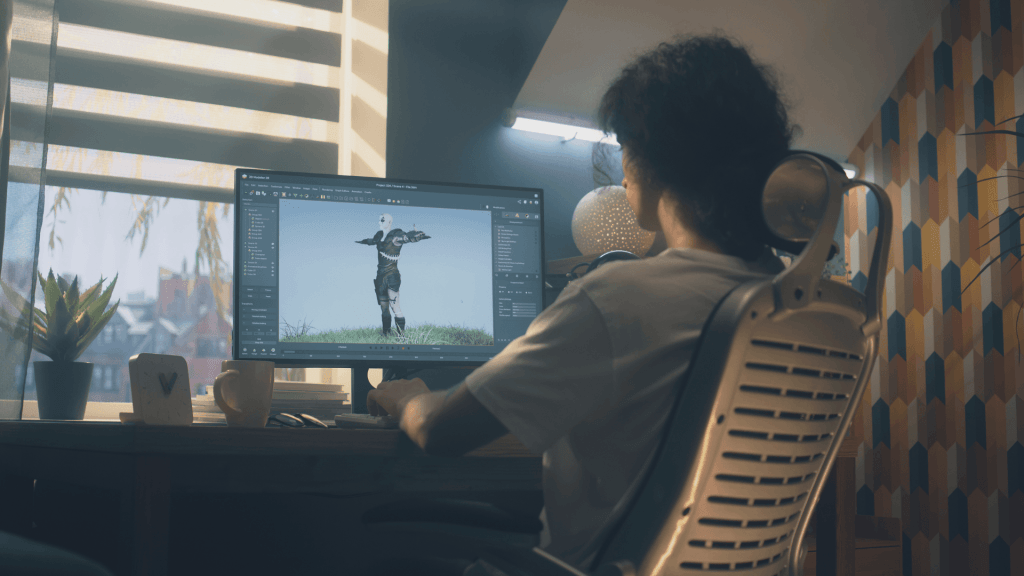

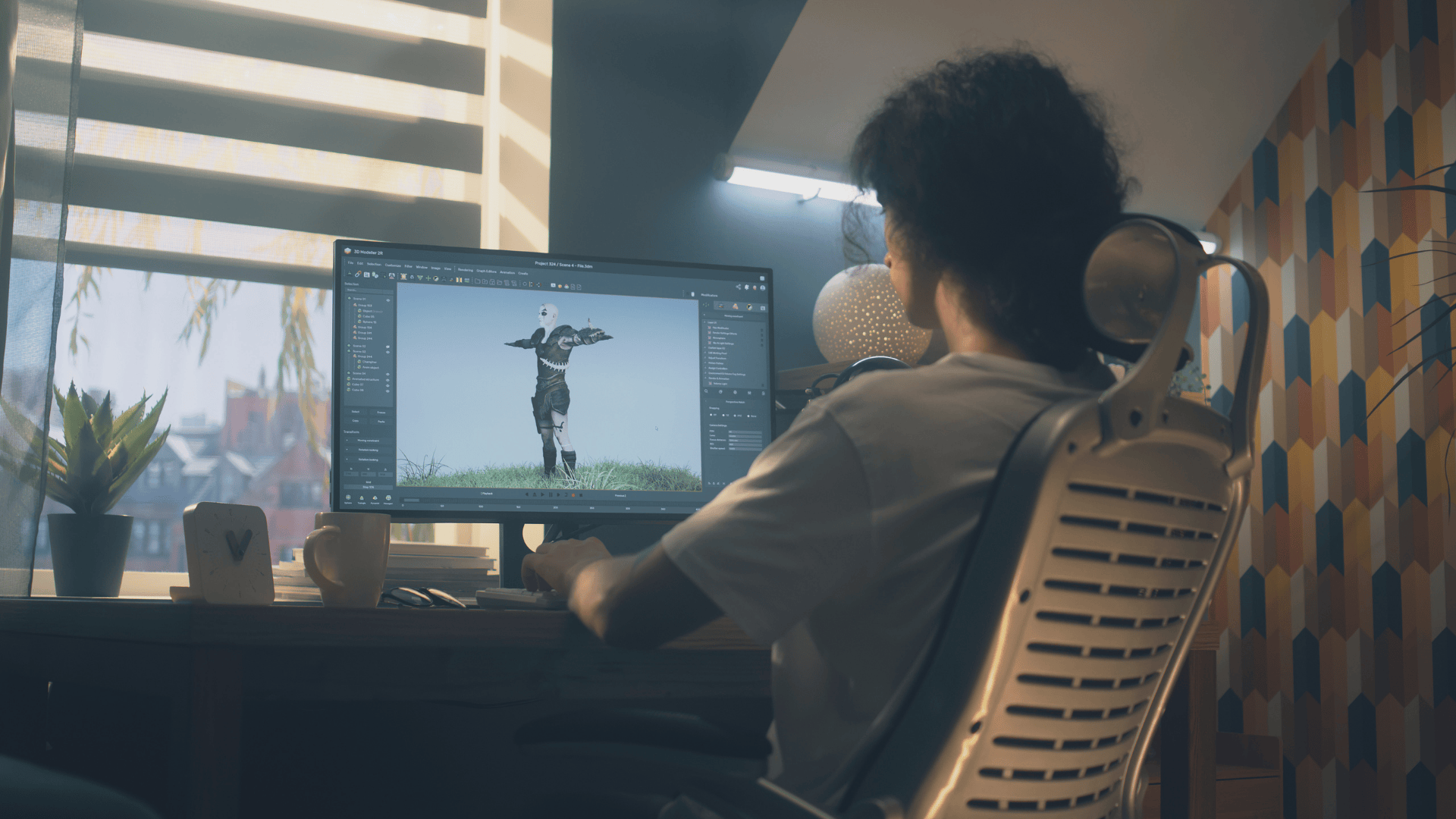

Which software tools are essential for sports animation?

Creating high-quality 3D animation for sports games relies on a suite of specialized software tools:

- 3D Animation & Rigging Software: Autodesk Maya is a staple for character rigging and keyframe animation. It provides powerful rigging tools to set up athletes’ skeletons and muscle deformations, and its graph editor is used to fine-tune motion curves. Maya is widely favored in the industry for character animation, offering robust tools for creating lifelike movements. Similarly, Autodesk MotionBuilder is crucial when working with motion capture data – it’s designed to edit and fine-tune mocap performances and blend animations easily, which is vital for refining sports moves captured from athletes.

- Motion Capture Systems: Hardware and accompanying software such as Vicon or Xsens suits are essential to capture real athlete movement. These come with their own software for recording and initial cleanup. The Rokoko or OptiTrack systems are also popular for more accessible mocap. The captured data is then imported into MotionBuilder or Maya for integration.

- Game Engine Animation Tools: If using engines like Unity or Unreal, their built-in animation systems are key. Unreal Engine’s Animation Blueprint system and Persona editor allow developers to set up complex state machines (for transitions between running, jumping, and shooting animations) and blend spaces (which blend animations based on parameters like speed or direction). Unity’s Mecanim animation system serves a similar purpose with state machines and blend trees. Mastery of the chosen engine’s animation toolset is essential for implementing the animations in the game and making them interactive.

- Physics Simulation Tools: For secondary motion (cloth, hair) and ragdoll, tools like Nvidia PhysX (often built into engines) are used. For example, simulating a basketball jersey’s movement or a player’s tumble after a collision might rely on physics engines integrated into the pipeline.

- Facial Animation Software (if needed): Sports games increasingly show players’ emotions during close-ups. Tools like Faceware or Unreal’s Live Link Face can capture and apply facial animations. Though not as central as body animation for sports, they add realism for celebrations or reactions.

- Others: If the sports game involves crowds or complex cameras, there are tools for those too: for example, navigation middleware such as Havok AI can help drive crowd behaviors, and cinematic tools such as Unreal’s Sequencer handle cutscenes and replay cameras.

In summary, a combination of Maya/MotionBuilder for creation, a solid motion capture pipeline, and engine-specific animation systems forms the backbone of sports game animation development. These tools ensure animators can create, tweak, and implement athlete movements that are believable and responsive in-game.
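To make the blend space idea above concrete, here is a minimal sketch of how a one-dimensional blend on speed might compute clip weights. It is not the API of Unreal or Unity; the clip names, speed thresholds, and the `blend_weights` function are all hypothetical, chosen only for illustration.

```python
from bisect import bisect_right

# Hypothetical clips and the speeds (m/s) at which each plays at full weight.
LOCOMOTION_CLIPS = [(0.0, "idle"), (1.5, "walk"), (4.0, "jog"), (8.0, "sprint")]


def blend_weights(speed, clips=LOCOMOTION_CLIPS):
    """Return {clip_name: weight} for a 1D blend space; weights sum to 1."""
    speeds = [s for s, _ in clips]
    # Clamp the input to the range covered by the clips.
    speed = max(speeds[0], min(speed, speeds[-1]))
    hi = bisect_right(speeds, speed)
    if hi >= len(clips):
        return {clips[-1][1]: 1.0}
    lo = hi - 1
    # Linear interpolation factor between the two neighbouring clips.
    t = (speed - speeds[lo]) / (speeds[hi] - speeds[lo])
    if t == 0.0:
        return {clips[lo][1]: 1.0}
    return {clips[lo][1]: 1.0 - t, clips[hi][1]: t}
```

A speed halfway between the walk and jog thresholds yields an even mix of those two clips, which the engine would then apply pose by pose. Real blend spaces are often two-dimensional (speed and direction) and also synchronize foot phases between clips, which this sketch leaves out.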

How do you capture realistic athlete movement (motion capture)?

Motion capture (mocap) is the gold standard for obtaining authentic athlete animations. To capture realistic movement:

- Professional Athletes or Stunt Performers are fitted with mocap suits that have many sensors or reflective markers. These performers actually play out actions on a stage – for a soccer game, one might perform dribbles, shots, tackles; for basketball, various dunks, crossovers, etc. Using athletes ensures the motions have proper weight shifts and technique.

- High-quality Mocap Hardware: Optical systems like Vicon use cameras to track the 3D positions of reflective markers, while inertial suits like Xsens estimate joint motion from body-worn sensors without external cameras. Both record at high frame rates. Modern setups can capture even complex interactions (like two players grappling for a ball).

- Capture Full Context: In sports games, interactions are key. Studios now capture 11v11 full soccer matches in mocap or 5v5 in basketball to get realistic multi-player interactions. This yields data like team formations, group celebrations, etc., not just isolated movements.

- Data Cleanup and Enhancement: Raw mocap data can be noisy, so animators use software (MotionBuilder, etc.) to clean and loop animations. They might enhance certain motions, exaggerating a bit for game readability (e.g., making a swing follow-through slightly bigger so it reads well on screen).

- Blending and ML: As seen in FIFA’s HyperMotion, mocap of entire sequences can be fed into machine learning algorithms that then interpolate and generate new animations in real-time. This means rather than animating every possible transition, the game can intelligently blend captured motions to fill gaps – like an algorithm learning how a player adjusts stride to approach a ball and then creating the appropriate animation on the fly.

Using mocap ensures that subtle elements – the way a basketball player shifts weight before jumping or how a tennis player rotates the torso into a swing – are preserved. It saves enormous time compared to animating from scratch and yields authenticity that hand animation can struggle with. The result of good mocap integration is that the virtual athletes move just like real ones, making the game feel grounded and believable. In practice, capturing realistic athlete movement is about combining great source performance (often capturing hundreds of moves, drills, and scenarios) with skilled processing to translate that into the game’s animation system.
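As a rough illustration of the cleanup step, the sketch below smooths one noisy animation channel (say, a joint's height over time) with a centered moving average. This is an assumption about what simple denoising could look like, not how MotionBuilder or any specific tool does it; the `smooth_channel` name and the default window size are hypothetical.

```python
def smooth_channel(samples, radius=2):
    """Smooth one mocap channel with a centered moving average.

    The window shrinks near the start and end so the output has the
    same number of samples as the input.
    """
    n = len(samples)
    smoothed = []
    for i in range(n):
        lo = max(0, i - radius)
        hi = min(n, i + radius + 1)
        window = samples[lo:hi]
        smoothed.append(sum(window) / len(window))
    return smoothed
```

A single-frame spike gets spread across its neighbours and reduced in height, while a steady signal passes through unchanged. The trade-off is that heavy smoothing also softens sharp, intentional motion such as a foot strike, which is why cleanup remains a judgment call for animators.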

What are key considerations for character rigging in sports?

Sports game characters require very robust and flexible rigs because of the range of motion athletes perform. Key considerations include:

- Full Range of Motion: The rig (skeletal setup) must accommodate extreme poses – basketball players reaching high above the rim, soccer players doing splits for saves, etc. Riggers ensure joints have appropriate rotation limits and deformations. Shoulders, hips, and spine setups are crucial so that arms can reach overhead or twist naturally without distorting the model.

- Muscle and Skin Deformation: Modern sports titles might use advanced rigging like muscle simulation or extra bone influences to simulate bulging muscles or tendon movement for extreme exertions. While not always muscle sims, at least carefully painted skin weights and corrective blend shapes are used (for example, a blend shape might engage when an elbow or knee is fully bent to correct volume loss).

- Inverse Kinematics (IK): Sports animations benefit greatly from IK, especially for foot planting and hand interactions. Rigs should have IK controls for legs so feet stay firmly on the ground when needed (preventing sliding) and for arms when characters need to place hands on other players or objects (like dribbling a ball or stiff-arming in football). Having a good IK/FK blend setup is important as animators will switch depending on the action.

- Facial Rigging: Given close-ups (like goal celebrations or arguing with referees), a detailed facial rig might be needed. This includes enough blendshapes or bone controls to express emotion (joy, frustration) convincingly. Facial animations make the athletes feel more alive during cutscenes or slow-motion replays.

- Cloth and Accessories: Rigging considerations extend to things like jerseys, straps, or even hair. Some rigs include proxy bones for clothes (or at least sockets for physics systems to attach). For instance, a long ponytail on a soccer player might be partially rigged to follow head movement, with additional physics simulation.

- Performance & Real-time: The rig should be optimized for the game engine, which means keeping the bone count within platform limits (budgets vary by engine and hardware, but roughly 100-150 bones per character is a common range in games). Riggers might therefore exclude unnecessary bones or use engine features (like Unity’s humanoid rig mapping) for retargeting and optimization. They also consider LOD (simpler rigs at distance).

In sports games, the characters are the focus 100% of the time, so a lot of polish goes into rigging them. A well-considered rig ensures that all captured or hand-crafted animations will look right on the character model, and that the transitions between animations remain smooth (e.g., foot alignment rigs help keep feet from popping between animations). Essentially, if the rig is poorly done, even the best mocap will look off – joints might bend weirdly or limbs may intersect. So investing time in a high-quality rig, specific to athletic motions, is a must for realism and fluid gameplay.
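The leg IK mentioned above can be sketched with the standard two-bone solution based on the law of cosines. The example below works in 2D with the hip at the origin; the function names and the angle conventions are assumptions made for this illustration, and a production rig would solve in 3D with a pole vector to control knee direction.

```python
import math


def two_bone_ik(target_x, target_y, upper_len, lower_len):
    """Solve a planar two-bone chain (hip at the origin) so its tip reaches the target.

    Returns (hip_angle, knee_angle) in radians. hip_angle is measured from
    the +x axis; knee_angle is the lower bone's rotation relative to the
    upper bone (0 means a straight leg).
    """
    dist_sq = target_x ** 2 + target_y ** 2
    # Law of cosines gives the knee bend. Clamping handles unreachable
    # targets by fully extending (or fully folding) the chain.
    cos_knee = (dist_sq - upper_len ** 2 - lower_len ** 2) / (2.0 * upper_len * lower_len)
    cos_knee = max(-1.0, min(1.0, cos_knee))
    knee_angle = math.acos(cos_knee)
    hip_angle = math.atan2(target_y, target_x) - math.atan2(
        lower_len * math.sin(knee_angle),
        upper_len + lower_len * math.cos(knee_angle),
    )
    return hip_angle, knee_angle


def foot_position(hip_angle, knee_angle, upper_len, lower_len):
    """Forward kinematics: where the foot ends up for the given joint angles."""
    knee_x = upper_len * math.cos(hip_angle)
    knee_y = upper_len * math.sin(hip_angle)
    foot_x = knee_x + lower_len * math.cos(hip_angle + knee_angle)
    foot_y = knee_y + lower_len * math.sin(hip_angle + knee_angle)
    return foot_x, foot_y
```

To pin a foot, the game would pass the planted ground position as the target every frame and override the animated leg angles with the solved ones, blending back to the animation when the foot lifts.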

How is physics simulation integrated for authentic gameplay?

Physics simulation plays a pivotal role in sports games for elements that cannot be fully animated due to unpredictability:

- Ball Physics: The trajectory, bounce, and spin of balls (football, basketball, tennis ball, etc.) are governed by physics engines to ensure realism. For example, in a soccer game, the ball’s curve on a free kick or the way it deflects off a post uses real physics formulas (the Magnus effect for spin, a coefficient of restitution for bounces). This adds variety, since small differences in speed, spin, and contact angle produce different bounces, and players can use physics strategically (like applying backspin so the ball stops dead), mirroring real sports.

- Collision and Ragdoll: When athletes collide or fall, physics takes over to some degree. Modern games often blend animation with ragdoll physics especially after a hit – so a tackled football player will go into a partial ragdoll that respects momentum, leading to varied fall outcomes. This avoids robotic or repetitive fall animations and increases authenticity as each impact feels unique and appropriate to the collision angle and force.

- Cloth and Equipment: Physics simulations are used for jerseys fluttering, shorts, or even the net reacting when a ball goes through (like a basketball swishing through the net, or a soccer goal net rippling on a goal). These simulations make the world feel tangible. They are often simplified (for performance) but tuned to look right (nets have springiness, cloth has proper weight).

- Friction and foot-planting: While largely animation, some sports games integrate physics for foot interactions. For instance, a player might slip when changing direction too fast at high momentum (simulating loss of footing). Racing events (like sprinting in Olympics games) might use physics to model inertia and momentum explicitly rather than relying purely on animation-driven movement.

- Environmental Physics: In games like hockey or any with a ball on ground, the surface properties (ice friction, grass drag when wet) might be simulated. A wet field could make the ball skid more – physics can handle these subtle differences dynamically rather than requiring separate animations.

Integrating physics requires careful balance so that it doesn’t undermine responsiveness. Sports games often use a hybrid approach: core movements are animated for responsiveness, while layered physics adds secondary realism. The payoff is significant: you get emergent moments like a ball taking an odd bounce off a divot, or player pile-ups where bodies entangle realistically. Such moments heighten the sense that you’re watching unscripted, live sports. For players, mastering gameplay includes understanding physics (like how far a ball will rebound or how players might stumble after a clash), which deepens the simulation aspect of sports games.
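The ball physics described above can be sketched in a few lines. The example below integrates gravity, a simplified Magnus acceleration proportional to spin × velocity, and a ground bounce scaled by a coefficient of restitution. The `magnus_coeff` and `restitution` defaults are illustrative assumptions rather than measured values, and air drag is left out for brevity.

```python
GRAVITY = -9.81  # m/s^2 along the z (up) axis


def cross(a, b):
    """Cross product of two 3D vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def step_ball(pos, vel, spin, dt, magnus_coeff=0.002, restitution=0.6):
    """Advance the ball one time step with gravity, Magnus lift, and ground bounce."""
    # Simplified Magnus acceleration: proportional to spin x velocity.
    mx, my, mz = cross(spin, vel)
    acc = (magnus_coeff * mx, magnus_coeff * my, GRAVITY + magnus_coeff * mz)
    # Semi-implicit Euler: update velocity first, then position.
    vel = tuple(v + a * dt for v, a in zip(vel, acc))
    pos = tuple(p + v * dt for p, v in zip(pos, vel))
    # Ground contact at z = 0: reflect the vertical velocity, losing energy.
    if pos[2] < 0.0 and vel[2] < 0.0:
        pos = (pos[0], pos[1], 0.0)
        vel = (vel[0], vel[1], -vel[2] * restitution)
    return pos, vel


def simulate(pos, vel, spin, steps, dt=0.001):
    """Return the list of positions visited over the given number of steps."""
    path = [pos]
    for _ in range(steps):
        pos, vel = step_ball(pos, vel, spin, dt)
        path.append(pos)
    return path
```

With zero spin a kicked ball stays in its vertical plane, while spin about the vertical axis bends the path sideways, which is the free-kick curve in miniature. Surface effects such as a wet pitch could be approximated by varying the restitution and adding a friction term on contact.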

What techniques create dynamic camera angles and replays?

Sports games strive to mimic broadcast TV presentations and also give cinematic flair in replays. Techniques to achieve dynamic camera work include:

- Multiple Camera Presets & AI Selection: Developers set up an array of virtual camera angles – sideline cams, overhead drone cams, helmet cams, crowd-level cams, etc. During gameplay, an AI system can switch cameras contextually (especially in replays or cutscenes) to highlight the action, much like a TV director cuts to the best angle. For instance, if a goal is scored, the game might automatically switch to a goal-line camera to show the ball crossing, then to a follow-cam on the celebrating player.

- Cinematic Camera Behaviors: Cameras are programmed with smoothing, lag, and anticipation to feel human-operated. EA’s sports titles often have “rail-cams” that track players smoothly or “shaky cam” for intense close-ups (without being too jarring). In replays, slow-motion and zooms are used to emphasize the drama – the camera might zoom into the spin on a ball or the strain on a player’s face. Some games use depth of field effects in replays to focus on the subject and blur background, adding a realistic DSLR-camera feel.

- Interactive Replay Systems: Many sports games allow players to manually control replays after a play. These systems give free camera movement, keyframing, and the ability to play/pause or change speed. Users can essentially become the cameraman, finding their favorite angle of a big play. The dynamic angle aspect comes from giving players these tools to explore the action from any perspective.

- Broadcast-style Transitions and Overlays: To enhance realism, games incorporate the same type of transitions (cutaways, slow-mo from multiple angles) you see in broadcasts. For example, a big slam dunk might immediately trigger an automatic replay from a baseline angle, then a second angle from above the rim. Overlaying this with a broadcast scoreboard or instant replay logo sells the effect. In fact, advanced camera orchestration can involve slight camera shake on heavy impacts or updating angle to keep the ball in frame at all times – things actual camera operators do.

- AI-driven Highlights: Some games now even automatically compile highlights at the end of a match (the best goals, big hits, etc.), choosing camera cuts and slow-mo appropriately – essentially auto-editing a reel. Underneath, they use heuristics (e.g., any goal triggers a highlight, any play with a dramatic camera shake event, etc.) to decide.
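The highlight heuristics in the last point might look something like the sketch below, which scores match events and keeps the top few in chronological order. The event kinds, base scores, and the `impact` field are hypothetical; a real game would tune these against its own event data.

```python
from dataclasses import dataclass


@dataclass
class MatchEvent:
    kind: str            # e.g. "goal", "save", "tackle" (hypothetical labels)
    minute: int
    impact: float = 0.0  # assumed 0..1 intensity, e.g. collision force


# Hypothetical base scores: goals always outrank other events.
BASE_SCORES = {"goal": 100.0, "save": 40.0, "tackle": 20.0, "foul": 10.0}


def highlight_score(event):
    """Score an event: a base value per kind plus a bonus for intensity."""
    return BASE_SCORES.get(event.kind, 0.0) + 30.0 * event.impact


def pick_highlights(events, max_clips=3):
    """Keep the highest-scoring events, returned in match order for the reel."""
    best = sorted(events, key=highlight_score, reverse=True)[:max_clips]
    return sorted(best, key=lambda e: e.minute)
```

Each selected event would then be handed to the replay system, which picks camera angles and slow-motion settings for that clip.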

All these techniques ensure the visual presentation is as thrilling as the gameplay. Dynamic cameras heighten excitement – a wide-angle shot gives tactical overview, while a quick cut to a close-up adds emotional weight. The goal is to replicate the directing of live sports and sometimes even exceed it (games can put cameras where real ones can’t easily go, like directly above a player mid-air for a dunk). When done well, players feel like both participant and spectator, with replay angles letting them appreciate their actions in the most epic way possible.
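The camera smoothing, lag, and anticipation described earlier can be approximated with exponential smoothing toward a target placed slightly ahead of the subject. This is a minimal sketch under assumed parameter values (`time_constant`, `lead_time`), not the method of any particular game.

```python
import math


def smooth_follow(cam_pos, target_pos, dt, time_constant=0.25):
    """Move the camera a fraction of the way to the target.

    Using 1 - exp(-dt / time_constant) keeps the lag consistent
    regardless of frame rate.
    """
    alpha = 1.0 - math.exp(-dt / time_constant)
    return tuple(c + (t - c) * alpha for c, t in zip(cam_pos, target_pos))


def lead_target(subject_pos, subject_vel, lead_time=0.3):
    """Aim ahead of a moving subject so the camera anticipates the play."""
    return tuple(p + v * lead_time for p, v in zip(subject_pos, subject_vel))
```

Each frame, the game would compute `lead_target` from the ball or player being tracked and pass the result to `smooth_follow`. A shorter time constant gives a tighter, more urgent camera; a longer one gives the heavier feel of a broadcast rig.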

How do you optimize animation for performance across platforms?

Ensuring smooth animation on all platforms (from high-end PCs to mobile or older consoles) is a critical part of sports game development:

- Level of Detail (LOD) for Animations: Just as with models, animations can have LOD. Complex animations with subtle nuance might be reserved for close-up cameras, whereas distant players could use simplified animations or update at lower frame rates without noticeable loss. For example, spectators in a stadium might animate at a lower fidelity than the athletes on the field.

- Bone Count & Influences: Rig optimization is key – reduce bone count where possible and limit how many bones affect each vertex (often 4 max). This is especially important on mobile platforms. Choosing not to rig fingers for distant characters or NPCs can save performance; on mobile, maybe only key athletes have full finger rigs.

- Bake or Simplify Physics on Lower Platforms: Physics-driven animations (like cloth or hair) can be CPU-intensive. On lower-end platforms, developers might bake these into pre-animated motions or disable some secondary motions entirely to maintain frame rate. They might also reduce the update frequency of ragdoll physics for far away or off-screen characters to save CPU.

- Use of Anim Compression: Modern engines allow compressing animation keyframes (removing redundant keys, lowering precision). For cross-platform, you might keep uncompressed or high-precision animations on powerful platforms, but use heavily compressed versions on mobile. Proper compression can reduce memory and improve cache performance without visible impact if tuned well.

- Adaptive Animation Update: Some engines let you skip animation updates for objects that don’t need it every frame (when off-screen, or if the motion is minor). Exploiting this – e.g., pausing crowd animations when not visible, or updating distant characters’ animations at half-rate – can yield performance wins.

- Testing and Profiling on Each Platform: It’s not a one-size-fits-all; you need to profile the animation system on each target. Perhaps on console the limitation is GPU skinning, while on mobile it’s CPU blend tree evaluation. Knowing the bottleneck, you might choose to turn off certain animation layers or procedural effects on weaker devices.

- GPU Skinning and Multi-threading: Using GPU skinning (offloading vertex deformation to the GPU), which is common now, and taking advantage of multi-threaded animation updates (many engines can evaluate multiple animation graphs in parallel across CPU cores) improves performance across the board. On newer platforms this is standard; on older ones, fall back gracefully if needed.
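One form of the compression mentioned above is removing redundant keys. The sketch below drops keyframes that linear interpolation between the kept neighbours would reproduce within a tolerance. It is a simplified illustration with a hypothetical `reduce_keys` function; engine compressors typically combine key reduction with quantization and other techniques.

```python
def reduce_keys(keys, tolerance=0.01):
    """Drop keys that linear interpolation can reproduce within tolerance.

    keys is a list of (time, value) pairs with strictly increasing times.
    The first and last keys are always kept.
    """
    if len(keys) <= 2:
        return list(keys)
    kept = [keys[0]]
    anchor = 0  # index of the most recently kept key
    for i in range(2, len(keys)):
        t0, v0 = keys[anchor]
        t1, v1 = keys[i]
        # Would every key between anchor and i survive being interpolated?
        fits = all(
            abs(v0 + (v1 - v0) * (t - t0) / (t1 - t0) - v) <= tolerance
            for t, v in keys[anchor + 1:i]
        )
        if not fits:
            # The previous key is needed to preserve the curve's shape.
            anchor = i - 1
            kept.append(keys[anchor])
    kept.append(keys[-1])
    return kept
```

A looser tolerance on mobile and a tighter one on high-end platforms would give the per-platform trade-off described in the list, at the cost of small deviations from the authored curve.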

The goal is to keep the gameplay at a solid frame rate (ideally 60fps or more for sports titles) on each platform. By carefully dialing down animation complexity where it won’t be noticed and leveraging engine optimizations, developers maintain visual quality. For example, a mobile version might reduce crowd animation variety or run fewer active animations concurrently, while the core athlete animations remain smooth.

A practical tip is to use simpler skeletons or skip certain animation features on low-end hardware. For example, limiting real-time IK on legacy consoles if it is too heavy might mean a foot occasionally slides a little, but overall performance is preserved. Reducing skeleton complexity (bones, vertices, constraints) is a direct way to boost mobile performance.

It’s a balance of fidelity against efficiency, always testing how much can be trimmed without compromising perceptible quality. By following these practices, the final product is optimized: high-end machines show the game in all its glory, while lower-end devices still get a smooth, playable experience with only minor reductions in animation detail.
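The reduced update rates for distant or off-screen characters could be sketched as below. The distance thresholds, interval values, and function names are assumptions for illustration; real projects would derive them from profiling on each target platform.

```python
def update_interval(distance_m, on_screen, is_user_controlled=False):
    """Return how many frames to wait between animation updates (1 = every frame)."""
    if is_user_controlled:
        return 1  # the controlled athlete must always stay responsive
    if not on_screen:
        return 8  # barely tick characters nobody can see
    if distance_m < 20.0:
        return 1
    if distance_m < 50.0:
        return 2  # half-rate updates for mid-distance players
    return 4      # far players and crowd members


def should_update(frame_index, character_id, interval):
    """Stagger updates by character id so the work spreads across frames."""
    return (frame_index + character_id) % interval == 0
```

Staggering by id avoids a spike on frames where every half-rate character would otherwise update at once. Skipped frames can reuse the last evaluated pose.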

What role does facial animation play in sports game realism?

Facial animation, while sometimes overlooked in sports games, plays an increasingly significant role in conveying emotion and personality, thereby enhancing realism. Consider moments in a sports match: a striker’s elation after scoring a goal, a tennis player’s grimace after missing a shot, or a coach yelling instructions from the sideline. If the faces of characters are blank or repetitive, those moments lose impact. Modern sports titles use facial capture and detailed face rigs to ensure athletes exhibit lifelike expressions. For example, in FIFA or NBA 2K, you’ll notice players shouting with mouths open and showing anger or joy in their eyes after critical plays. These expressions are often captured from real athletes or voice actors during mocap sessions (capturing facial movements alongside body movements) and then refined by animators.

Facial animation is especially crucial during cutscenes and replays. When the camera zooms in on a player post-play, the subtle eye movements and facial muscles working make the difference between an uncanny mannequin and a believable human character. It adds to narrative immersion too – many sports games have story modes or at least contextual commentary, and seeing a player react believably to a championship win or a bad foul makes the experience feel authentic. In terms of gameplay, players might not consciously focus on faces in the heat of action, but peripherally, a grimace or determined look can register and add to the atmosphere.

Also, beyond players, think of referees or coaches on the sidelines – their reactions are part of the spectacle. A referee sternly frowning and looking disappointed while giving a yellow card, or a coach screaming with veins popping (like real coaches do) can be quite immersive. Facial animation tech like Faceware or Cubic Motion has been employed in some AAA sports games to achieve near-photoreal face movement.

In summary, facial animation humanizes the digital athletes. It reinforces what the body language is telling us. When a player misses an easy goal and buries his face in his hands, the dejection on his face seals the authenticity of that moment. Modern audiences have high expectations (they’re used to detailed faces in general games), so sports games have upped their game – ensuring that iconic player likenesses are not just in appearance but also in expression. The role of facial animation is thus to capture the emotional highs and lows of sports, making victories sweeter and losses more bitter, contributing strongly to a sports game’s realism and emotional impact.
