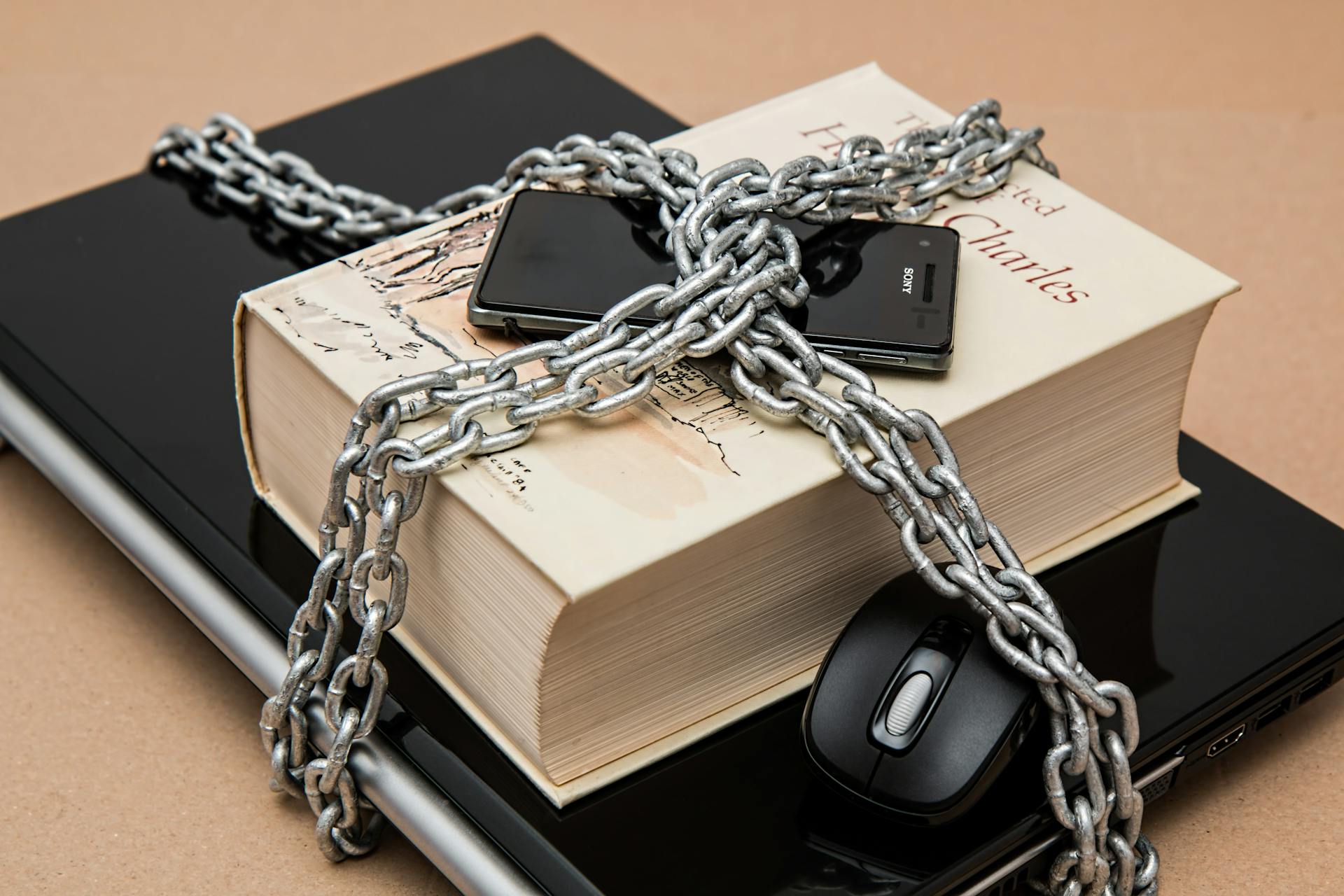

Public institutions face a nonstop stream of cyber risk that can disrupt vital services, expose sensitive records, and erode public trust. Protecting data and infrastructure takes steady investment, repeatable processes, and a playbook that accounts for both people and technology.

The tactics below focus on fundamentals that scale across agencies of different sizes. They balance prevention with response, and they center on visibility, identity, segmentation, and resilience so leaders can adapt as threats evolve.

Embrace Zero Trust As The Default

Every user, device, and workload should be treated as untrusted by default. Zero Trust is not a single product but a set of practices that verify explicitly, enforce least privilege, and monitor continuously. Agencies that adopt this mindset close many common gaps.

A national guidance document emphasized data-centric controls and the need to pair access decisions with strong monitoring, logging, and alerting. It highlighted the role of encryption and classification to protect sensitive records at rest and in transit. That approach helps teams limit blast radius if an account or endpoint is compromised.

Zero Trust works best when rolled out in phases with clear milestones. Start with high-value systems, then extend to shared services and remote access. Use posture checks to keep endpoints compliant before they connect.
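
As a rough illustration of "verify explicitly, enforce least privilege," the sketch below evaluates a single access request. The role names, resource names, and the `decide` function are hypothetical and stand in for whatever policy engine an agency actually uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user_role: str
    mfa_verified: bool
    device_compliant: bool  # result of an endpoint posture check
    resource: str


# Hypothetical least-privilege map: which roles may reach which resources.
ALLOWED_ROLES = {
    "case-records": {"caseworker", "records-admin"},
    "payroll": {"hr-analyst"},
}


def decide(request: AccessRequest) -> tuple[bool, str]:
    """Return (allowed, reason). Anything not explicitly permitted is denied."""
    if not request.mfa_verified:
        return False, "identity not verified"
    if not request.device_compliant:
        return False, "device failed posture check"
    if request.user_role not in ALLOWED_ROLES.get(request.resource, set()):
        return False, "role not authorized for resource"
    return True, "allowed"
```

Returning a reason alongside the decision is a deliberate choice here: logging it for every request supports the continuous monitoring that Zero Trust depends on.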

Know The Attack Surface And Measure Risk

You cannot protect what you cannot see. Agencies should inventory systems, shadow IT, third-party connections, and external exposures.

A living inventory of this kind drives patching priorities and clarifies which services must be restored first after an incident. Public administrators benefit from understanding the full range of threats facing government agencies and using that context to set measurable goals. A shared risk picture helps leadership direct budget to the controls that reduce the most risk for the least effort. It also keeps the conversation grounded when new tools or mandates appear.

Dashboards that track vulnerabilities, failed logins, phishing reports, and backup health make progress visible. Short, recurring reviews keep attention on the items that matter most and stop work from stalling.
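
One way to turn an inventory into a patching priority list is a simple score per asset. The sketch below is illustrative only: the asset names, fields, and weights are assumptions, and an agency would calibrate its own.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    internet_facing: bool
    critical_service: bool
    open_critical_vulns: int


def risk_score(asset: Asset) -> int:
    # Illustrative weights only, not taken from any standard.
    score = asset.open_critical_vulns * 10
    if asset.internet_facing:
        score += 25
    if asset.critical_service:
        score += 15
    return score


def patch_queue(assets):
    """Return assets ordered from highest to lowest risk score."""
    return sorted(assets, key=risk_score, reverse=True)


# Hypothetical inventory entries.
inventory = [
    Asset("print-server", internet_facing=False, critical_service=False, open_critical_vulns=3),
    Asset("permit-portal", internet_facing=True, critical_service=True, open_critical_vulns=2),
    Asset("dispatch-cad", internet_facing=False, critical_service=True, open_critical_vulns=2),
]

for asset in patch_queue(inventory):
    print(asset.name, risk_score(asset))
```

A transparent formula like this is easy to show on a dashboard and to challenge in a review, which matters more than the exact weights.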

Segment Networks And Isolate Critical Systems

Segmentation limits how far an intruder can move. Break large flat networks into smaller zones that reflect business functions and sensitivity. Deny by default between zones, then open only the pathways that operations require.

Microsegmentation adds another layer inside data centers and the cloud. It enforces policy at the workload level and makes lateral movement noisy and risky for adversaries. Even simple guardrails, like blocking administrative ports between user subnets, cut risk.
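
Deny by default can be expressed as an explicit allowlist of flows, with everything else rejected. The zone names and ports below are hypothetical, and real enforcement would live in firewalls or a microsegmentation platform rather than application code.

```python
# Hypothetical allowlist: (source zone, destination zone, destination port).
ALLOWED_FLOWS = {
    ("user", "app", 443),
    ("app", "database", 5432),
    ("admin-jump", "app", 22),
}


def is_allowed(src_zone: str, dst_zone: str, port: int) -> bool:
    """Deny by default: permit only flows that are listed explicitly."""
    return (src_zone, dst_zone, port) in ALLOWED_FLOWS


# Administrative ports between user subnets are blocked simply because
# no rule opens them.
print(is_allowed("user", "app", 443))    # True
print(is_allowed("user", "user", 3389))  # False
```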

Critical systems should live in protected enclaves. Limit remote management, require jump hosts, and watch east-west traffic closely. When combined with Zero Trust controls, these steps make intrusion detection faster and remediation cheaper.

Monitor Continuously And Hunt For Anomalies

Logs tell the story of what happened and who did it. Collect telemetry from endpoints, identity providers, firewalls, cloud control planes, and SaaS apps. Normalize the data so analysts can correlate events quickly.
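
Normalization usually means mapping each source's fields onto one shared schema. The sketch below assumes two made-up record shapes, one from an identity provider and one from a firewall. The field names are hypothetical and do not reflect any vendor's actual log format.

```python
from datetime import datetime, timezone


def normalize_idp(record: dict) -> dict:
    # Assumed shape: {"actor": str, "result": str, "ts": ISO 8601 string with offset}
    return {
        "source": "idp",
        "user": record["actor"].lower(),
        "action": record["result"],
        "timestamp": datetime.fromisoformat(record["ts"]).astimezone(timezone.utc),
    }


def normalize_firewall(record: dict) -> dict:
    # Assumed shape: {"username": str, "verdict": str, "epoch": seconds since 1970 UTC}
    return {
        "source": "firewall",
        "user": record["username"].lower(),
        "action": record["verdict"],
        "timestamp": datetime.fromtimestamp(record["epoch"], tz=timezone.utc),
    }
```

With usernames lowercased and timestamps in UTC, events from both sources can be sorted and joined on the same keys.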

Federal guidance on data security stressed the need for continuous monitoring backed by alerting workflows and retention that supports investigations. It pointed out that controls should follow the data, not just the device, so sensitive information is tracked wherever it travels. That perspective helps teams spot unusual access to records early.

Threat hunting turns passive logging into active defense. Build repeatable hunts for suspicious patterns like new admin accounts, spikes in failed MFA, or service creation on workstations. Close the loop by tuning detections based on lessons learned.
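
A repeatable hunt can be as small as a function that runs over recent events. The sketch below flags users with a spike in failed MFA attempts. The event fields, the ten-minute window, and the threshold of five are assumptions to tune against real data.

```python
from collections import Counter
from datetime import datetime, timedelta


def failed_mfa_spikes(events, now, window=timedelta(minutes=10), threshold=5):
    """Return users with at least `threshold` MFA failures inside the window ending at `now`."""
    cutoff = now - window
    failures = Counter(
        event["user"]
        for event in events
        if event["outcome"] == "mfa_failed" and cutoff <= event["timestamp"] <= now
    )
    return sorted(user for user, count in failures.items() if count >= threshold)


# Hypothetical events: five recent failures for one user, two for another.
now = datetime(2024, 1, 1, 12, 0)
events = [
    {"user": "alice", "outcome": "mfa_failed", "timestamp": now - timedelta(minutes=m)}
    for m in range(1, 6)
] + [
    {"user": "bob", "outcome": "mfa_failed", "timestamp": now - timedelta(minutes=m)}
    for m in range(1, 3)
]

print(failed_mfa_spikes(events, now))  # ['alice']
```

Keeping the window and threshold as parameters makes it easy to tune the detection after each hunt instead of rewriting it.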

Prepare For Ransomware Across SLTT And Federal Environments

Ransomware remains a top hazard because it targets services that communities rely on. Agencies should assume disruption is possible and design for quick recovery. That begins with immutable backups, offsite copies, and regular restore testing.

A joint advisory from federal and state partners noted that a specific ransomware family has repeatedly hit state, local, tribal, and territorial governments since 2019. The advisory urged network segmentation, strict RDP controls, and robust backup strategies aligned to recovery time goals. It emphasized user awareness and reporting, so small clues are not missed.

Consider a layered playbook that includes:

- Blocking macros and unsigned scripts by default

- Disabling or tightly restricting remote management tools

- Practicing tabletop exercises that cover ransom decisions and public communication

When possible, pre-stage gold images and configuration baselines. Rapid re-imaging can beat long cleanup windows and return essential services to the public.

Protect Operational Technology And Physical-World Interfaces

Infrastructure like cameras, access control, radios, and sensors often runs outside traditional IT. These systems control doors, elevators, and energy, so they deserve equal attention. Start by identifying devices, updating firmware, and changing default credentials.

Place OT on separate networks with limited routes to IT. Use application layer gateways and one-way data flows where possible. Monitor for new devices and unusual traffic that could signal tampering.
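
Monitoring for new devices can start with a comparison between an approved inventory and what is actually seen on the OT network. The sketch below assumes both lists are available as MAC address strings. How they are collected (switch tables, passive sensors) is outside its scope.

```python
def normalize_mac(mac: str) -> str:
    """Lowercase a MAC address and use colons as the separator."""
    return mac.strip().lower().replace("-", ":")


def unknown_devices(approved_macs, observed_macs):
    """Return observed MAC addresses that are missing from the approved inventory."""
    approved = {normalize_mac(mac) for mac in approved_macs}
    observed = {normalize_mac(mac) for mac in observed_macs}
    return sorted(observed - approved)


# Hypothetical addresses for illustration.
approved = ["00:1A:2B:3C:4D:5E"]
observed = ["00-1a-2b-3c-4d-5e", "AA:BB:CC:DD:EE:FF"]
print(unknown_devices(approved, observed))  # ['aa:bb:cc:dd:ee:ff']
```

MAC addresses can be spoofed, so a check like this is a tripwire for unexpected hardware, not proof of a device's identity.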

Procure equipment with security in mind. Require secure boot, signed updates, and vendor support windows that match your risk tolerance. Document physical procedures so staff know how to operate safely during cyber outages.

Drill Incident Response And Practice Recovery

Response is a team sport that spans IT, legal, communications, and leadership. Clear roles reduce confusion when seconds count. Keep contact trees updated and store plans where they are reachable during a crisis.

Run realistic tabletop exercises that include decisions about evidence preservation, service restoration, and public messaging. Rotate scenarios so teams face credential theft, data exfiltration, and destructive malware. Capture action items after each drill and assign owners.

Backups are only useful if they restore quickly and completely. Test restores on a schedule, verify integrity, and measure time to recovery for core services. These metrics show whether investments are paying off.
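
A restore test can record both integrity and elapsed time in one pass. In the sketch below, `restore` is a hypothetical callable that returns the restored bytes, and `rto_seconds` is the agency's own recovery time objective. Real backup tooling would replace both.

```python
import hashlib
import time


def sha256_of(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def run_restore_test(restore, expected_sha256: str, rto_seconds: float) -> dict:
    """Run a restore, then report integrity and time against the recovery objective."""
    start = time.monotonic()
    restored = restore()
    elapsed = time.monotonic() - start
    return {
        "integrity_ok": sha256_of(restored) == expected_sha256,
        "elapsed_seconds": elapsed,
        "within_rto": elapsed <= rto_seconds,
    }


# Stand-in for a real restore job, for illustration only.
original = b"permit records"
result = run_restore_test(lambda: original, sha256_of(original), rto_seconds=60)
print(result["integrity_ok"], result["within_rto"])
```

Storing these results after every scheduled test gives a trend line for time to recovery, which is the metric leadership can act on.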

Good defense is not one tool or a one-time project. It is a culture that combines clear rules, regular practice, and technology that fits the mission. Agencies that commit to this path make steady gains that the public can feel.

Start with visibility, tighten identity, and segment what matters most. Then keep practicing until recovery is fast and predictable. The result is a safer environment for the people who depend on public services every day.
