For large financial services firms, the pressing question is how to handle big data. For everyone else, the real question is usually which vendor to select when data is limited. We chose software as a service (SAS) because it provides fast, reliable risk analysis when data is limited.

Larch Lane Advisors invests in early-stage hedge funds: funds that are less than three years old or that manage less than $400 million in assets. In addition to its fund-of-funds investments, Larch Lane Advisors has made almost 26 seed investments in hedge funds since it began operating in 2001. Risk management is built into every stage of the investment process, and the firm's key objective is to protect capital.
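The screening rule above is an either/or test: a fund qualifies if it meets either threshold, not necessarily both. A minimal Python sketch of that rule, assuming fund age is measured in years and assets in US dollars; the `Fund` class, the `is_early_stage` function, and the fund names are hypothetical illustrations, not Larch Lane's actual screening process.

```python
from dataclasses import dataclass

# Thresholds from the description above: a fund counts as early-stage if it
# is less than three years old OR manages less than $400 million.
MAX_AGE_YEARS = 3
MAX_AUM_USD = 400_000_000


@dataclass
class Fund:
    name: str          # hypothetical identifier, for illustration only
    age_years: float   # time since the fund launched
    aum_usd: float     # assets under management, in US dollars


def is_early_stage(fund: Fund) -> bool:
    """Return True if the fund meets either early-stage criterion."""
    return fund.age_years < MAX_AGE_YEARS or fund.aum_usd < MAX_AUM_USD


if __name__ == "__main__":
    funds = [
        Fund("Fund A", age_years=1.5, aum_usd=900_000_000),    # young but large
        Fund("Fund B", age_years=6.0, aum_usd=250_000_000),    # older but small
        Fund("Fund C", age_years=5.0, aum_usd=1_200_000_000),  # neither
    ]
    for f in funds:
        print(f.name, is_early_stage(f))
```

Because the test uses `or`, Fund A and Fund B both qualify while Fund C does not; the strict `<` comparisons assume a fund exactly at a threshold does not qualify.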

Larch Lane's director of risk management explains that he uses Software-as-a-Service (SAS) to measure market risk and to assist with portfolio construction. SAS works through the firm's limited data to give the research and portfolio management teams the information they need to make investment decisions.

He describes the process as data going in at one end, some magic happening in between, and answers appearing instantly at the other. However, he also points out that one day this perfection may suddenly break down.

According to him, the system is not only efficient but perfect: a miracle of engineering, the kind that scientists will discuss for years to come, though only after completing elaborate initiation rituals.

At some point, things will go haywire and break down. Anyone who cannot trace the underlying value chain of the data will never be able to find out exactly how, where, and why things went wrong.

Consider an organization that tracked shifts in its customer base. On Monday, its models showed it serving mainly young professionals; by Friday, according to the same models, it was serving mainly elderly clients.

This made no sense. Neither the acquisition rate nor the churn rate suggested anything of the kind, so a shift this large could not have been real and was almost certainly an error. Needless to say, it did little for the credibility of the analytical marketing team.
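The reasoning in this example can be turned into an automatic sanity check. Assuming existing customers do not move between age segments within a week, a segment can grow only through acquisition and shrink only through churn, so a net change larger than those flows points to a data problem rather than a real shift. A minimal sketch; the function name and the weekly figures are hypothetical, and `acquired` and `churned` are assumed to be company-wide totals for the period.

```python
def segment_shift_is_plausible(
    start_count: int,
    end_count: int,
    acquired: int,
    churned: int,
) -> bool:
    """Check whether a segment's size change can be explained by flows.

    Assumes existing customers do not change segment during the period,
    so the segment can only gain customers through acquisition and only
    lose them through churn. `acquired` and `churned` are totals for the
    whole customer base over the same period.
    """
    net_change = end_count - start_count
    # Largest possible gain: every new customer lands in this segment.
    # Largest possible loss: every churned customer came from this segment.
    return -churned <= net_change <= acquired


if __name__ == "__main__":
    # Hypothetical weekly figures for a "young professionals" segment.
    print(segment_shift_is_plausible(10_000, 9_700, acquired=400, churned=500))
    print(segment_shift_is_plausible(10_000, 2_000, acquired=400, churned=500))
```

The first call is consistent with normal churn; the second, a drop of 8,000 customers against only 500 departures, is flagged as impossible.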

After a couple of sleepless nights, the team identified the problem: one of its data sources had failed to update. The error had gone unnoticed and had corrupted the segmentation model.
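A stale source like this can often be caught before it reaches a model by checking how recently each input was refreshed. A minimal sketch, assuming each source records a last-updated timestamp and a daily refresh is expected; the function name, source names, and one-day threshold are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def find_stale_sources(
    last_updated: dict[str, datetime],
    max_age: timedelta,
    now: datetime,
) -> list[str]:
    """Return, sorted, the names of sources not refreshed within max_age."""
    return sorted(
        name for name, ts in last_updated.items() if now - ts > max_age
    )


if __name__ == "__main__":
    now = datetime(2024, 1, 5, 9, 0, tzinfo=timezone.utc)  # a Friday morning
    sources = {
        "crm_accounts": datetime(2024, 1, 5, 2, 0, tzinfo=timezone.utc),
        "demographics_feed": datetime(2024, 1, 1, 2, 0, tzinfo=timezone.utc),
        "transactions": datetime(2024, 1, 4, 23, 0, tzinfo=timezone.utc),
    }
    print(find_stale_sources(sources, timedelta(days=1), now))
```

Running a check like this before each model refresh would have flagged the feed that stopped updating on Monday, instead of letting it silently skew Friday's segmentation.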

Unfortunately, they found the answer only after revalidating the entire model, re-examining every imputation, and reviewing every report for accuracy and consistency. Although they eventually resolved the problem, they could have avoided it entirely had they maintained lineage throughout their data value chain.
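Lineage, in this sense, means every derived dataset records which versions of which inputs it was built from, so a suspicious output can be traced straight back to its raw sources instead of revalidating everything. A minimal sketch, assuming versions are stored as date strings; the `Dataset` class, the `trace_sources` function, and the dataset names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dataset:
    """One versioned dataset plus the datasets it was built from."""
    name: str
    version: str
    inputs: tuple["Dataset", ...] = ()


def trace_sources(ds: Dataset) -> set[tuple[str, str]]:
    """Walk the lineage graph and return (name, version) of every raw source."""
    if not ds.inputs:
        return {(ds.name, ds.version)}  # no parents: this is a raw source
    sources: set[tuple[str, str]] = set()
    for parent in ds.inputs:
        sources |= trace_sources(parent)
    return sources


if __name__ == "__main__":
    crm = Dataset("crm_accounts", "2024-01-05")
    demo = Dataset("demographics_feed", "2024-01-01")  # never refreshed
    cleansed = Dataset("customers_clean", "2024-01-05", (crm, demo))
    segments = Dataset("segments", "2024-01-05", (cleansed,))
    print(sorted(trace_sources(segments)))
```

Tracing the Friday segmentation output immediately shows that it depends on a demographics feed last versioned on Monday.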

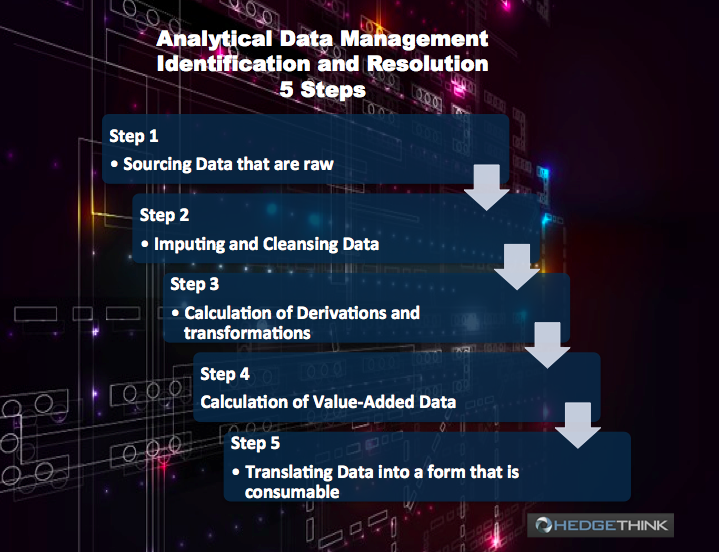

Every analytical process goes through five steps. In analytical data management, the simplest way to identify and resolve problems quickly is to keep the data from every step segregated and protected.

The five simple steps are as follows:

- Sourcing raw data

- Imputing and cleansing data

- Calculating derivations and transformations

- Calculating value-added data

- Translating data into a consumable form
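The five steps can be sketched as a pipeline in which each step's output is saved separately (segregated) and cannot be overwritten (protected). This is a minimal sketch assuming an in-memory store and records held as lists of dictionaries; the `StageStore` class, the step functions, and their toy logic (mean imputation, an age-65 threshold) are hypothetical illustrations, not a prescribed implementation.

```python
import copy
from typing import Any, Callable

Records = list[dict[str, Any]]


class StageStore:
    """Write-once store keeping a separate copy of each step's output."""

    def __init__(self) -> None:
        self._stages: dict[str, Records] = {}

    def save(self, stage: str, rows: Records) -> None:
        if stage in self._stages:
            raise ValueError(f"stage {stage!r} already written")  # protect
        self._stages[stage] = copy.deepcopy(rows)  # segregate from later steps

    def load(self, stage: str) -> Records:
        return copy.deepcopy(self._stages[stage])  # callers get a copy


# Hypothetical implementations of the five steps.
def source_raw() -> Records:
    return [{"id": 1, "age": 34}, {"id": 2, "age": None}, {"id": 3, "age": 71}]


def impute_and_cleanse(rows: Records) -> Records:
    known = [r["age"] for r in rows if r["age"] is not None]
    fill = sum(known) / len(known)  # mean imputation, for illustration
    return [{**r, "age": r["age"] if r["age"] is not None else fill} for r in rows]


def derive(rows: Records) -> Records:
    return [{**r, "is_senior": r["age"] >= 65} for r in rows]


def add_value(rows: Records) -> Records:
    share = sum(r["is_senior"] for r in rows) / len(rows)
    return [{"senior_share": share}]


def to_consumable(rows: Records) -> Records:
    return [{"report": f"Senior share: {rows[0]['senior_share']:.0%}"}]


def run_pipeline(store: StageStore) -> Records:
    steps: list[tuple[str, Callable[[Records], Records]]] = [
        ("cleansed", impute_and_cleanse),
        ("derived", derive),
        ("value_added", add_value),
        ("consumable", to_consumable),
    ]
    store.save("raw", source_raw())
    previous = "raw"
    for name, step in steps:
        # Each step reads only the saved output of the step before it.
        store.save(name, step(store.load(previous)))
        previous = name
    return store.load(previous)


if __name__ == "__main__":
    store = StageStore()
    print(run_pipeline(store))
    print(store.load("cleansed"))  # inspect any intermediate step
```

Because every intermediate result is kept, a surprising report can be debugged by loading each stage in turn and finding where the values first go wrong. The deep copies are what cost extra storage.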

Segregating and protecting the data associated with each of these five steps makes it easy for teams to maintain, improve, and debug their routine work. It reduces risk, makes the process more efficient, and shortens ramp-up times. It does increase storage costs, but the gains in maintainability and the non-technical benefits outweigh the additional investment in storage capacity.

This may seem like a simple step, but it is a lifesaver, especially for efficiency. Over time, everything breaks, and time must be spent putting it back together. Every hour spent fixing things is an hour not spent creating assets and processes that generate value.

HedgeThink.com is the fund industry’s leading news, research and analysis source for individual and institutional accredited investors and professionals.